When Kubernetes Isn't the Answer: Right-Sizing Your Cloud Architecture

Teams pick Kubernetes before they understand their problem. This creates unnecessary complexity. I've seen simple apps that would work perfectly on Azure App Service struggle with container orchestration nightmares. Requirements should drive platform choice, not trends.

I've spent the last ten years at Microsoft helping Fortune 500 companies architect their cloud solutions. In that time, I've seen the same mistake repeated countless times: teams pick their platform before they understand their problem.

The conversation usually starts the same way: "We need Kubernetes." When I ask why, the answers vary. "Everyone's using it." "We want to be cloud-native." "Our developers want container orchestration." What I rarely hear is a clear explanation of their actual requirements.

This backwards approach of choosing tools first and figuring out how to use them later creates unnecessary complexity. It leads to operational nightmares and failed projects. Most importantly, it wastes time and money solving problems you don't have.

Requirements First, Tools Second

The right way to pick a platform is simple: understand your workload, know your team, then choose your tools. Not the other way around.

Start with your application. What does it actually do? How much traffic does it handle? Does it need to scale up and down quickly? Is it stateful or stateless? Does it process events, serve web traffic, or run batch jobs?

Next, look at your team. How many people will operate this system? What's their experience level? Can they handle complex operational tasks, or do they need something simpler? Are they ready to become Kubernetes experts, or would they rather focus on business logic?

Finally, consider your constraints. What's your budget? How much operational overhead can you accept? Do you have compliance requirements? How much downtime can you tolerate?

These questions matter because the wrong platform choice creates problems that compound over time. A small team trying to run Kubernetes will spend more time fighting infrastructure than building features. A simple application running on an enterprise platform will cost more than it should.

I learned this lesson early in my career at Microsoft. Working with the Azure Global Customer Success Unit, I helped companies migrate their workloads to the cloud. The successful projects started with requirements. The failed ones started with technology choices.

One client insisted on running their simple web application on Kubernetes because they'd heard it was "the future." Six months later, they were spending 80% of their development time on platform issues. A plain Azure App Service deployment would have solved their problem in days, not months.

The principle applies beyond Kubernetes. Teams choose complex databases when they need simple storage. They build microservices when a monolith would work better. They use event-driven architectures for applications that don't need them.

Technology decisions should solve business problems, not create new ones. When you start with requirements, you can make choices that actually help your team deliver value. When you start with tools, you often end up with solutions looking for problems.

This isn't about avoiding new technology. It's about picking the right tool for the job. Sometimes that tool is Kubernetes. Sometimes it's something much simpler.

The Kubernetes Update Trap

Here's a scenario I've seen too many times: a team runs their application on Kubernetes for months without problems. Then their hosting provider announces a mandatory cluster update. Suddenly, their application breaks.

The team panics. They don't understand why the update broke their system. They can't fix it quickly because they treat Kubernetes as a black box. Worse, they discover they can't roll back because the hosting provider has already moved to the new version.

I've watched teams beg their cloud providers to delay updates. I've seen companies pay extra for extended support on outdated versions. Some teams rewrite their applications just to avoid dealing with platform changes.

This nightmare happens because teams think Kubernetes is just infrastructure. They deploy their applications and assume everything will keep working. But Kubernetes is a complex system with its own release cycle, breaking changes, and operational requirements.

The problem gets worse with managed services. Teams think "managed Kubernetes" means "no operational burden." It doesn't. Managed services handle the control plane, but you still own everything that runs on it. When the platform changes, your applications need to adapt.

Consider what happens during a typical cluster update. The Kubernetes API might deprecate certain resources. Network policies could change. Storage classes might get updated. Security contexts could tighten. Each change can break applications that depend on the old behavior.
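One of these failure modes, removed API versions, can be checked before the upgrade lands. Here is a minimal Python sketch of that idea, not a substitute for a real upgrade-readiness tool. It scans manifest text for a handful of `apiVersion`/`kind` pairs that upstream Kubernetes has stopped serving. The table is deliberately incomplete, the name `find_removed_apis` and the sample manifest are hypothetical, and the line-based parsing assumes simple manifests with unquoted top-level `apiVersion` and `kind` keys.

```python
import re

# A few APIs removed by upstream Kubernetes, keyed by (apiVersion, kind).
# The value is the release that stopped serving them. Incomplete on purpose.
REMOVED_APIS = {
    ("extensions/v1beta1", "Deployment"): "1.16",
    ("extensions/v1beta1", "Ingress"): "1.22",
    ("networking.k8s.io/v1beta1", "Ingress"): "1.22",
    ("batch/v1beta1", "CronJob"): "1.25",
    ("policy/v1beta1", "PodDisruptionBudget"): "1.25",
    ("autoscaling/v2beta2", "HorizontalPodAutoscaler"): "1.26",
}

# Matches only unindented (top-level) apiVersion and kind lines.
_FIELD = re.compile(r"^(apiVersion|kind):[ \t]*(\S+)[ \t]*$", re.MULTILINE)


def _version_tuple(version: str) -> tuple[int, ...]:
    """Turn '1.25' into (1, 25) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_removed_apis(manifest_text: str, target_version: str) -> list[str]:
    """Return one warning per document whose API is gone in target_version."""
    warnings = []
    # Multi-document YAML separates documents with a line containing '---'.
    for document in re.split(r"^---[ \t]*$", manifest_text, flags=re.MULTILINE):
        fields = dict(_FIELD.findall(document))
        key = (fields.get("apiVersion"), fields.get("kind"))
        removed_in = REMOVED_APIS.get(key)
        if removed_in and _version_tuple(target_version) >= _version_tuple(removed_in):
            warnings.append(f"{key[1]} uses {key[0]}, removed in {removed_in}")
    return warnings


# Hypothetical manifest: one outdated CronJob, one current Deployment.
manifest = """\
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: nightly-report
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
"""

print(find_removed_apis(manifest, "1.25"))
# ['CronJob uses batch/v1beta1, removed in 1.25']
```

Running a check like this in CI against the version your provider has announced turns a surprise outage into a routine pull request. It covers only API removals; changes to networking, storage, or security defaults still need testing on a real cluster.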

Teams that understand Kubernetes see these updates coming. They test their applications against new versions. They update their manifests before the migration deadline. They plan for changes and adapt accordingly.

Teams that treat Kubernetes as infrastructure get surprised. They don't test against new versions because they don't know how. They don't read release notes because they assume someone else is handling updates. When things break, they don't have the skills to fix them.

The operational burden extends beyond updates. Kubernetes requires ongoing maintenance. You need to monitor resource usage, manage networking, handle storage, and configure security. You need to understand how your applications interact with the platform.

Even managed Kubernetes services shift this burden rather than eliminate it. Yes, your cloud provider handles control plane updates. But you still need to manage your workloads, understand the platform, and adapt to changes.

This creates a hidden cost that many teams miss during platform selection. They see the compute and storage costs but ignore the operational overhead. They budget for servers but not for the expertise needed to run them.

The worst part is that teams often choose Kubernetes for workloads that don't need it. They accept all this complexity for applications that would run perfectly on simpler platforms. They solve problems they don't have while creating problems they can't handle.

The update trap catches teams because they make platform decisions without understanding the full cost. They see Kubernetes as a way to deploy containers, not as a complex distributed system that requires ongoing expertise.

When Simple Beats Complex

Most applications don't need Kubernetes. They need a way to run code reliably and cost-effectively. Simpler platforms often deliver better results with less effort.

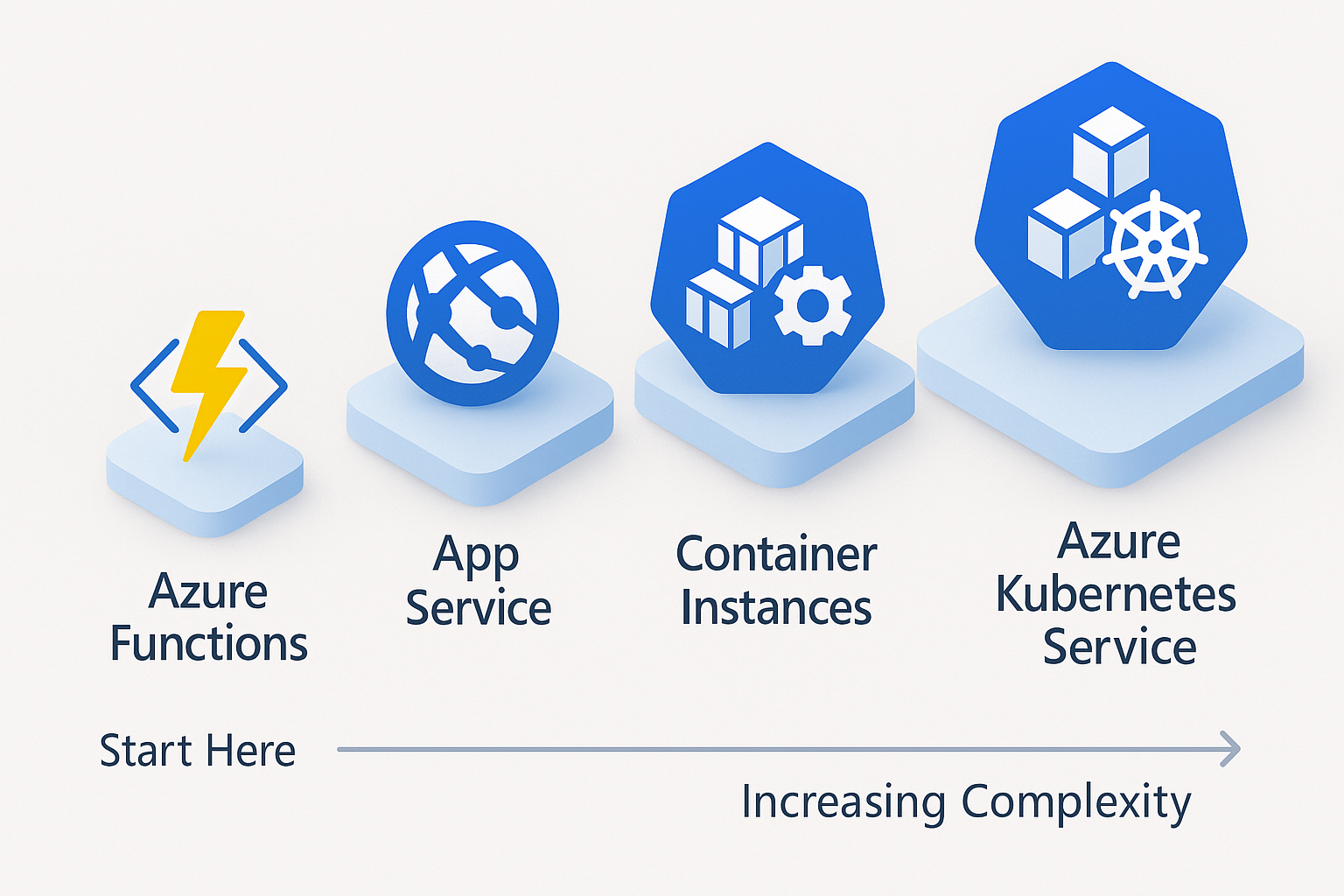

Take event-driven workloads. Azure Functions handles events without any infrastructure management. You write code that responds to triggers. The platform scales automatically, bills by usage, and handles all the operational details.

Compare that to running the same workload on Kubernetes. You need to build container images, write deployment manifests, configure auto-scaling, set up monitoring, and handle updates. You're solving infrastructure problems instead of business problems.

I worked with a manufacturing company that processed transaction events. They initially planned to use Kubernetes because they wanted "microservices architecture." After reviewing their requirements, we moved them to Azure Functions. Their processing latency dropped, their costs decreased, and their team could focus on business logic instead of platform management.

Web applications show the same pattern. Azure App Service runs web apps without container orchestration. You deploy your code and get automatic scaling, health monitoring, and integrated CI/CD. The platform handles security patches, load balancing, and backup.

A Kubernetes deployment for the same application requires significantly more work. You need ingress controllers, service meshes, certificate management, and monitoring solutions. You're building a platform instead of an application.

Container workloads don't automatically need orchestration. Azure Container Instances runs containers without cluster management. You get isolated containers with guaranteed resources and simple networking. That makes it a good fit for batch jobs, build tasks, or simple services that don't need complex scheduling.

The pattern holds across cloud providers. AWS Lambda competes with Functions for event processing. Google Cloud Run offers similar container hosting without orchestration. These platforms solve real problems with less complexity.

The key insight is matching platform capabilities to actual requirements. Event-driven workloads benefit from serverless platforms. Simple web applications work well with platform services. Batch jobs often need basic container hosting.

Kubernetes excels at complex orchestration scenarios: multiple interdependent services that need sophisticated scheduling, networking, and resource management; applications that require custom operators or complex deployment patterns; and workloads that need fine-grained control over the runtime environment.

But these scenarios are less common than teams think. Most applications have straightforward requirements that simpler platforms handle better. They need reliable hosting, not complex orchestration.

The simplicity advantage compounds over time. Simpler platforms require less expertise, reduce operational overhead, and let teams focus on features instead of infrastructure. They often cost less and deliver better reliability.

This doesn't mean avoiding containers or modern deployment practices. It means choosing the right level of abstraction for your needs. Sometimes that's Kubernetes. Often it's something simpler.

Making the Right Choice

Platform selection should be systematic, not emotional. Here's how to make decisions that actually help your team.

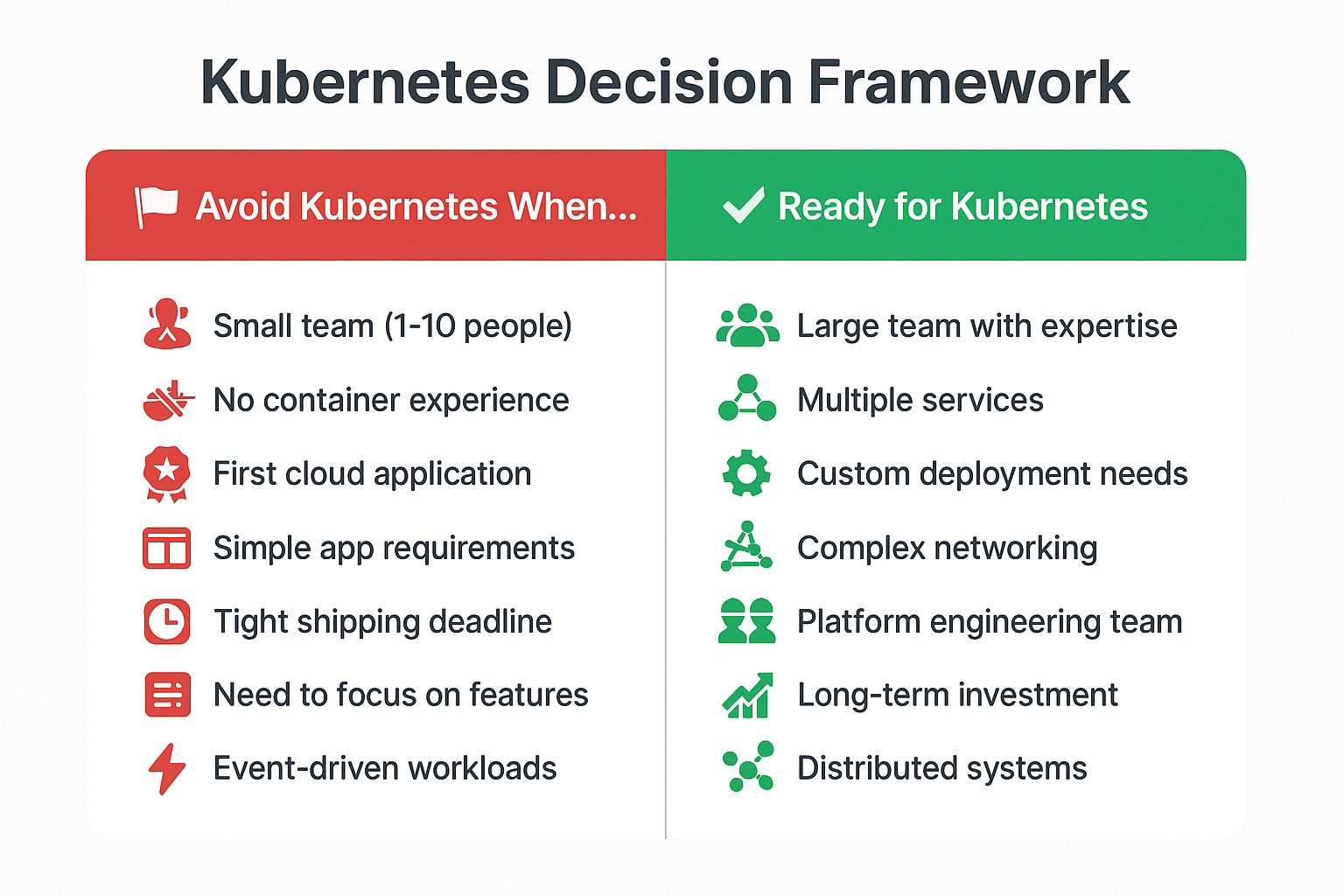

Start with team size and capability. Small teams (fewer than 10 people) rarely have the bandwidth for complex platforms. They need solutions that work out of the box with minimal configuration. Large teams (50+ people) can justify the investment in platform expertise.

Medium teams (10 to 50 people) face the hardest choices. They're too small to dedicate people to platform management but too large for simple solutions. They often benefit from managed services that provide Kubernetes capabilities without full operational responsibility.

Consider your application patterns. Simple request-response applications work well on App Service or similar platforms. Event-driven architectures benefit from serverless functions. Complex distributed systems might need Kubernetes for proper orchestration.

Look at your scaling requirements. Do you need to scale from zero to thousands of requests? Serverless platforms handle this better than container orchestration. Do you need predictable performance under consistent load? Traditional hosting might work better than dynamic scaling.
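The two paragraphs above amount to a lookup from workload pattern to a starting platform. A rough Python sketch of that lookup follows. The names `Pattern`, `STARTING_POINT`, and `suggest_platform` are hypothetical, the platforms are only the ones this article mentions, and the scale-from-zero override is my simplification of the scaling advice. Treat the result as a place to start evaluating, not a verdict.

```python
from enum import Enum


class Pattern(Enum):
    REQUEST_RESPONSE = "request-response"
    EVENT_DRIVEN = "event-driven"
    BATCH = "batch"
    DISTRIBUTED = "complex distributed system"


# Starting points taken from the article's own examples, not a full catalog.
STARTING_POINT = {
    Pattern.REQUEST_RESPONSE: "Azure App Service",
    Pattern.EVENT_DRIVEN: "Azure Functions",
    Pattern.BATCH: "Azure Container Instances",
    Pattern.DISTRIBUTED: "Kubernetes",
}


def suggest_platform(pattern: Pattern, scales_from_zero: bool = False) -> str:
    """Suggest where to start evaluating for a given workload pattern."""
    # Assumption: a simple web workload that must scale from zero to
    # thousands of requests is better served by a serverless platform.
    if scales_from_zero and pattern is Pattern.REQUEST_RESPONSE:
        return "Azure Functions"
    return STARTING_POINT[pattern]


print(suggest_platform(Pattern.REQUEST_RESPONSE))
print(suggest_platform(Pattern.REQUEST_RESPONSE, scales_from_zero=True))
```

The point of writing it down is that the input is a property of the workload. Nothing in the function asks what the team would like to be running.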

Evaluate your operational maturity. Can your team handle cluster upgrades, network troubleshooting, and resource optimization? Do they understand container lifecycle management, security contexts, and storage orchestration? If not, simpler platforms will serve you better.

Consider your compliance requirements. Some industries need specific security controls or audit capabilities. Kubernetes provides fine-grained control but requires expertise to configure correctly. Managed platforms often provide compliance features out of the box.

Think about your timeline. How quickly do you need to deliver? Simple platforms let you ship faster. Complex platforms require more upfront investment but might provide better long-term flexibility.

Here are red flags that point away from Kubernetes: your team has no container experience, you're building your first cloud application, you have a single application with simple requirements, you can't dedicate resources to platform learning, or you need to ship in the next few months.

Green flags for Kubernetes: you have multiple interdependent services, you need custom deployment patterns, you have platform engineering expertise, you're building a platform for other teams, or you have complex networking requirements.
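If it helps to make the red and green flags explicit, they fit in a small checklist. The Python sketch below encodes the ten flags listed above as booleans. The names `TeamSituation` and `assess` are hypothetical, and the tie-breaking rule (any red flag outweighs green flags) is my own assumption rather than a formal method.

```python
from dataclasses import dataclass

RED_FLAGS = (
    "no_container_experience",
    "first_cloud_application",
    "single_simple_application",
    "no_capacity_for_platform_learning",
    "must_ship_within_months",
)
GREEN_FLAGS = (
    "multiple_interdependent_services",
    "custom_deployment_patterns",
    "platform_engineering_expertise",
    "building_platform_for_other_teams",
    "complex_networking",
)


@dataclass
class TeamSituation:
    # Red flags: reasons to stay away from Kubernetes for now.
    no_container_experience: bool = False
    first_cloud_application: bool = False
    single_simple_application: bool = False
    no_capacity_for_platform_learning: bool = False
    must_ship_within_months: bool = False
    # Green flags: reasons Kubernetes may be worth its cost.
    multiple_interdependent_services: bool = False
    custom_deployment_patterns: bool = False
    platform_engineering_expertise: bool = False
    building_platform_for_other_teams: bool = False
    complex_networking: bool = False


def assess(situation: TeamSituation) -> str:
    """Summarize the flags; red flags win ties (an assumption of this sketch)."""
    red = [name for name in RED_FLAGS if getattr(situation, name)]
    green = [name for name in GREEN_FLAGS if getattr(situation, name)]
    if red and green:
        return "mixed signals: start simpler and revisit once the red flags clear"
    if red:
        return "start with a simpler platform"
    if green:
        return "Kubernetes is a reasonable candidate"
    return "no strong signal: decide from the workload requirements"


print(assess(TeamSituation(no_container_experience=True, must_ship_within_months=True)))
```

Filling this in honestly, as a team, matters more than the output. The checklist describes where you are today, which is the only basis the decision should rest on.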

The decision shouldn't be permanent. You can start simple and move to complex platforms as your needs grow. Many successful companies began with basic hosting and migrated to Kubernetes as their requirements evolved.

The key is being honest about your current situation. Don't choose platforms based on where you want to be. Choose based on where you are today and what you need to accomplish.

Getting It Right

The best architecture decisions start simple and grow into complexity as needed. This approach lets you deliver value quickly while building the expertise to handle more sophisticated platforms.

Begin with the simplest solution that meets your requirements. If Azure Functions can handle your workload, start there. If App Service works for your web application, use it. You can always migrate to more complex platforms later.

Build operational skills before adding operational burden. Learn how your applications behave in production. Understand their scaling patterns, failure modes, and resource requirements. This knowledge will help you make better platform decisions in the future.

Most importantly, remember that platform choice is just one decision among many. The best platform is the one that lets your team focus on solving business problems instead of infrastructure problems.

Your architecture should serve your goals, not the other way around. Sometimes that means choosing boring technology that just works. Sometimes it means investing in complex platforms for future flexibility. The key is making conscious decisions based on real requirements, not industry trends.

In my experience helping companies architect their cloud solutions, the most successful teams are pragmatic about technology choices. They pick tools that help them deliver value, not tools that look good on their resumes. That's how you build systems that actually work.